Chess AI has revolutionized the game, outplaying even the strongest grandmasters with techniques rooted in advanced algorithms and math. Let’s explore four techniques behind its dominance.

1. Minimax Algorithm: The Decision-Making Engine

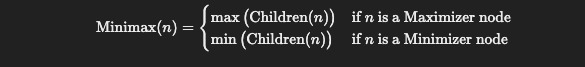

The Minimax algorithm[1] is at the heart of most classical chess engines: it searches the tree of possible moves and replies down to a fixed depth. It assumes both players act optimally:

- The Maximizer seeks to maximize their advantage.

- The Minimizer tries to minimize it.

How It Works:

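The recursive formula for Minimax is:

$$
\text{minimax}(s, d) =
\begin{cases}
\text{eval}(s) & \text{if } d = 0 \text{ or } s \text{ is terminal} \\
\max_{a \in A(s)} \text{minimax}(\text{result}(s, a),\, d - 1) & \text{if the Maximizer is to move} \\
\min_{a \in A(s)} \text{minimax}(\text{result}(s, a),\, d - 1) & \text{if the Minimizer is to move}
\end{cases}
$$

where $s$ is the current position, $d$ is the remaining search depth, $A(s)$ is the set of legal moves, $\text{result}(s, a)$ is the position reached by playing move $a$, and $\text{eval}(s)$ scores a position from the Maximizer's point of view.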
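A minimal Python sketch of this recursion, assuming a toy game tree stored as nested lists: each integer leaf is a precomputed score from the Maximizer's point of view (standing in for the evaluation function), and each list holds the positions reachable in one move. Because the tree is explicit, reaching a leaf plays the role of the depth limit; a real engine would generate legal moves and stop at a fixed depth instead.

```python
from typing import List, Union

# Toy game tree (hypothetical representation): an int is a leaf score from the
# Maximizer's point of view; a list holds the positions reachable in one move.
Tree = Union[int, List["Tree"]]

def minimax(node: Tree, maximizing: bool) -> int:
    """Return the minimax value of `node`, assuming both sides play optimally."""
    if isinstance(node, int):  # leaf: stands in for the evaluation at the depth limit
        return node
    # Score every reply, with the other side to move one level down.
    child_values = [minimax(child, not maximizing) for child in node]
    return max(child_values) if maximizing else min(child_values)

# Depth-2 example: the Maximizer picks a branch, then the Minimizer replies.
tree: Tree = [[3, 5], [6, 9], [1, 2]]
print(minimax(tree, maximizing=True))  # 6
```

The Minimizer would answer each branch with its lowest leaf (3, 6, and 1), so the Maximizer's best guaranteed outcome is the middle branch, worth 6.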

Challenges:

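The number of positions to examine grows exponentially with search depth. With a branching factor of approximately 35:

$$
\text{positions} \approx 35^{d}
$$

where $d$ is the depth of the search in plies (half-moves). A 6-ply search already means about $35^6 \approx 1.8$ billion positions.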

2. Alpha-Beta Pruning: Smarter Search

To speed up Minimax, Alpha-Beta Pruning[2] skips branches that cannot influence the final decision. It keeps track of two bounds:

- $\alpha$: The best score the Maximizer is already guaranteed (a lower bound).

- $\beta$: The best score the Minimizer is already guaranteed (an upper bound).

The Pruning Condition:

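When:

$$
\alpha \geq \beta
$$

the engine stops exploring that branch, because one side already has a better alternative elsewhere and will never let play reach it. This saves valuable computation time.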
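A minimal Python sketch of alpha-beta, assuming a toy game tree stored as nested lists (integer leaves are scores from the Maximizer's point of view). The `leaves` argument is a hypothetical instrumentation hook that records every leaf actually evaluated, so the effect of pruning is visible.

```python
import math
from typing import List, Union

# Toy game tree (hypothetical representation): int = leaf score, list = children.
Tree = Union[int, List["Tree"]]

def alphabeta(node: Tree, maximizing: bool, alpha: float, beta: float,
              leaves: List[int]) -> float:
    """Minimax value of `node`, skipping branches that cannot change the result.

    `leaves` collects each leaf score that is actually evaluated.
    """
    if isinstance(node, int):
        leaves.append(node)
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, leaves))
            alpha = max(alpha, value)  # best score the Maximizer can guarantee
            if alpha >= beta:          # pruning condition: the Minimizer avoids this node
                break
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, leaves))
        beta = min(beta, value)        # best score the Minimizer can guarantee
        if alpha >= beta:              # pruning condition: the Maximizer avoids this node
            break
    return value

tree: Tree = [[3, 5], [6, 9], [1, 2]]
seen: List[int] = []
print(alphabeta(tree, True, -math.inf, math.inf, seen))  # 6
print(seen)                                              # [3, 5, 6, 9, 1]
```

The result matches plain Minimax, but the last leaf (2) is never examined: once the Minimizer can answer the third branch with 1, that branch is already worse for the Maximizer than the 6 available elsewhere ($\alpha = 6 \geq \beta = 1$).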

Improved Efficiency:

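This technique reduces the complexity of Minimax from:

$$
O(b^{d}) \quad \text{to} \quad O(b^{d/2})
$$

in the best case (when the strongest moves are searched first), where $b$ is the branching factor and $d$ is the depth. In effect, alpha-beta can search roughly twice as deep in the same time.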
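To put numbers on this, the snippet below compares the leaf count of plain Minimax, $b^d$, with Knuth and Moore's exact best-case count for alpha-beta on a uniform tree with perfect move ordering, $b^{\lceil d/2 \rceil} + b^{\lfloor d/2 \rfloor} - 1$. It assumes a uniform branching factor of 35, which real chess positions only approximate.

```python
def minimax_leaves(b: int, d: int) -> int:
    """Leaves plain Minimax visits in a uniform tree with branching factor b."""
    return b ** d

def alphabeta_best_case_leaves(b: int, d: int) -> int:
    """Minimum leaves alpha-beta must examine with perfect move ordering:
    b^ceil(d/2) + b^floor(d/2) - 1 (integer arithmetic, no floats)."""
    return b ** ((d + 1) // 2) + b ** (d // 2) - 1

for depth in range(1, 7):
    print(depth, minimax_leaves(35, depth), alphabeta_best_case_leaves(35, depth))
```

At depth 6 this gives 1,838,265,625 leaves for Minimax against 85,749 for best-case alpha-beta. Move ordering in practice is imperfect, so real engines land somewhere between the two.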

3. Heuristics and Evaluation Functions

Since it’s impractical to search every line all the way to the end of the game, chess engines stop at a fixed depth and rely on evaluation functions[3] to estimate the strength of the positions they reach.

A Simple Evaluation Function:

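In its simplest form, the evaluation is just the material advantage $M$, using the conventional piece values:

$$
M = 9(Q - Q') + 5(R - R') + 3(N - N') + 3(B - B') + (P - P')
$$

where $Q, R, N, B, P$ are the numbers of queens, rooks, knights, bishops, and pawns, respectively, for the side being evaluated, and the primed symbols count the opponent's pieces.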
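A minimal Python sketch of this material count, assuming the position arrives as the piece-placement field of a FEN string (uppercase letters are White, lowercase are Black) and scoring from White's side, so White's pieces are the unprimed counts. The FEN input and the function name are illustrative choices, not part of any particular engine.

```python
# Conventional piece values; the king is omitted because it is never traded.
PIECE_VALUES = {"q": 9, "r": 5, "n": 3, "b": 3, "p": 1}

def material(fen_placement: str) -> int:
    """Material advantage M for White from a FEN piece-placement field."""
    score = 0
    for ch in fen_placement:
        value = PIECE_VALUES.get(ch.lower())
        if value is None:
            continue  # king, digit (empty squares), or '/' (rank separator)
        # White pieces add to M, Black pieces (the primed counts) subtract.
        score += value if ch.isupper() else -value
    return score

start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"
print(material(start))                                          # 0
print(material("r1bqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"))  # 3 (Black lacks a knight)
```

Real evaluation functions add many more terms (mobility, king safety, pawn structure), but material is usually the largest single component.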

4. Neural Networks and Monte Carlo Tree Search (MCTS)

Modern engines like AlphaZero[4] combine deep learning with Monte Carlo Tree Search (MCTS)[5] to reach superhuman playing strength.

Monte Carlo Tree Search:

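MCTS doesn’t expand the entire game tree. Instead, it grows the tree selectively, using random simulations (playouts) to estimate how good each move is and the UCB1 (Upper Confidence Bound) formula to decide which child node to explore next:

$$
\text{UCB1}_i = \bar{X}_i + C \sqrt{\frac{\ln N}{n_i}}
$$

Where:

- $\bar{X}_i$: Average reward of node $i$.

- $C$: Exploration parameter.

- $N$: Total number of simulations for the parent node.

- $n_i$: Simulations for child node $i$.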
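A minimal Python sketch of the selection step, assuming each child node stores its total playout reward (each reward in $[0, 1]$) and its visit count; the `Child` class is hypothetical. The average-reward term favors moves that have done well so far, while the square-root bonus grows for rarely visited children, so the search keeps exploring them. The exploration parameter $C = \sqrt{2}$ is a common textbook default, not a tuned value.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class Child:
    """Hypothetical per-child statistics stored in an MCTS node."""
    total_reward: float  # sum of playout rewards through this child
    visits: int          # n_i: simulations through this child

def ucb1(child: Child, parent_visits: int, c: float = math.sqrt(2)) -> float:
    """UCB1 score: average reward (exploitation) plus exploration bonus."""
    if child.visits == 0:
        return math.inf  # unvisited children are always tried first
    average_reward = child.total_reward / child.visits             # X-bar_i
    bonus = c * math.sqrt(math.log(parent_visits) / child.visits)  # C * sqrt(ln N / n_i)
    return average_reward + bonus

def select(children: List[Child], parent_visits: int, c: float = math.sqrt(2)) -> int:
    """Index of the child with the highest UCB1 score."""
    return max(range(len(children)),
               key=lambda i: ucb1(children[i], parent_visits, c))

children = [Child(total_reward=7.0, visits=10), Child(total_reward=1.0, visits=2)]
print(select(children, parent_visits=12))         # 1: less explored, larger bonus
print(select(children, parent_visits=12, c=0.0))  # 0: pure exploitation
```

The second child has the lower average (0.5 versus 0.7) but has been tried only twice, so its exploration bonus wins; with `c=0.0` only the average reward counts and the first child is chosen.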

Neural Networks:

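Instead of relying on handcrafted heuristics, AlphaZero trains a neural network through self-play to predict:

- The value of a position (the expected game outcome).

- The probability distribution over moves (which moves look most promising).

During search, these predictions take the place of MCTS's random playouts: the value estimates how good a position is, and the move probabilities steer the search toward promising branches.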
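To make the network's two outputs concrete, here is a minimal Python sketch of how such raw outputs are typically post-processed: a softmax restricted to legal moves gives the move probabilities, and a tanh squashes the value into $[-1, 1]$ (loss to win). The logits, the move strings, and the function names are hypothetical stand-ins for real network output, not AlphaZero's actual code.

```python
import math
from typing import Dict, List

def policy_from_logits(logits: Dict[str, float], legal_moves: List[str]) -> Dict[str, float]:
    """Softmax over legal moves only; illegal moves get no probability."""
    m = max(logits[mv] for mv in legal_moves)  # subtract the max for numerical stability
    exps = {mv: math.exp(logits[mv] - m) for mv in legal_moves}
    total = sum(exps.values())
    return {mv: e / total for mv, e in exps.items()}

def value_from_raw(raw: float) -> float:
    """Squash a raw score into [-1, 1]: -1 = loss, 0 = draw, +1 = win."""
    return math.tanh(raw)

# Hypothetical raw outputs (made-up numbers) for a few moves from the start position.
logits = {"e2e4": 2.0, "d2d4": 1.5, "g1f3": 1.0, "a1a3": 0.5}
legal = ["e2e4", "d2d4", "g1f3"]  # a1a3 is illegal: the a2 pawn blocks the rook
policy = policy_from_logits(logits, legal)
print(policy)
print(value_from_raw(0.3))
```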

Final Thoughts

Chess AI combines traditional algorithms like Minimax and Alpha-Beta Pruning with modern techniques like neural networks and MCTS.

For games that involve chance, such as Monopoly 🏡, one might use probability-aware algorithms like expectimax[6] or MCTS, along with long-term optimization strategies such as reinforcement learning (RL).

For games like poker ♥️, which involve imperfect information, randomness, and strategic bluffing, one might look into algorithms like counterfactual regret minimization (CFR)[7].

Footnotes

- https://dynomight.substack.com/p/chess

- https://www.reddit.com/r/LocalLLaMA/comments/1gmkz9z/what_happens_when_llms_play_chess_and_the/

- https://git.savannah.gnu.org/cgit/chess.git

- [1] https://en.wikipedia.org/wiki/Minimax

- [2] https://en.wikipedia.org/wiki/Alpha%E2%80%93beta_pruning

- [3] https://en.wikipedia.org/wiki/Evaluation_function

- [4] https://github.com/google-deepmind/mctx

- [5] https://en.wikipedia.org/wiki/Monte_Carlo_tree_search

- [6] https://en.wikipedia.org/wiki/Expectiminimax

- [7] https://poker.cs.ualberta.ca/publications/NIPS07-cfr.pdf
